We conducted three studies in three school districts to evaluate Digital Promise's Dynamic Learning Project (DLP). Below, you can read the research brief summarizing results from all three studies. You can also download this research brief or the research design memo for this project as a printable PDF using the blue buttons above.

Valeriy Lazarev

Adam Schellinger

Jenna Zacamy

Empirical Education conducted rapid-cycle evaluations (RCEs) in three districts in the south-central and southeastern United States, henceforth referred to as Districts A, B, and C. Each study evaluated the impact of DLP coaching on student achievement. The research questions, samples, and analytic methods varied across districts because the data available in each district differed.

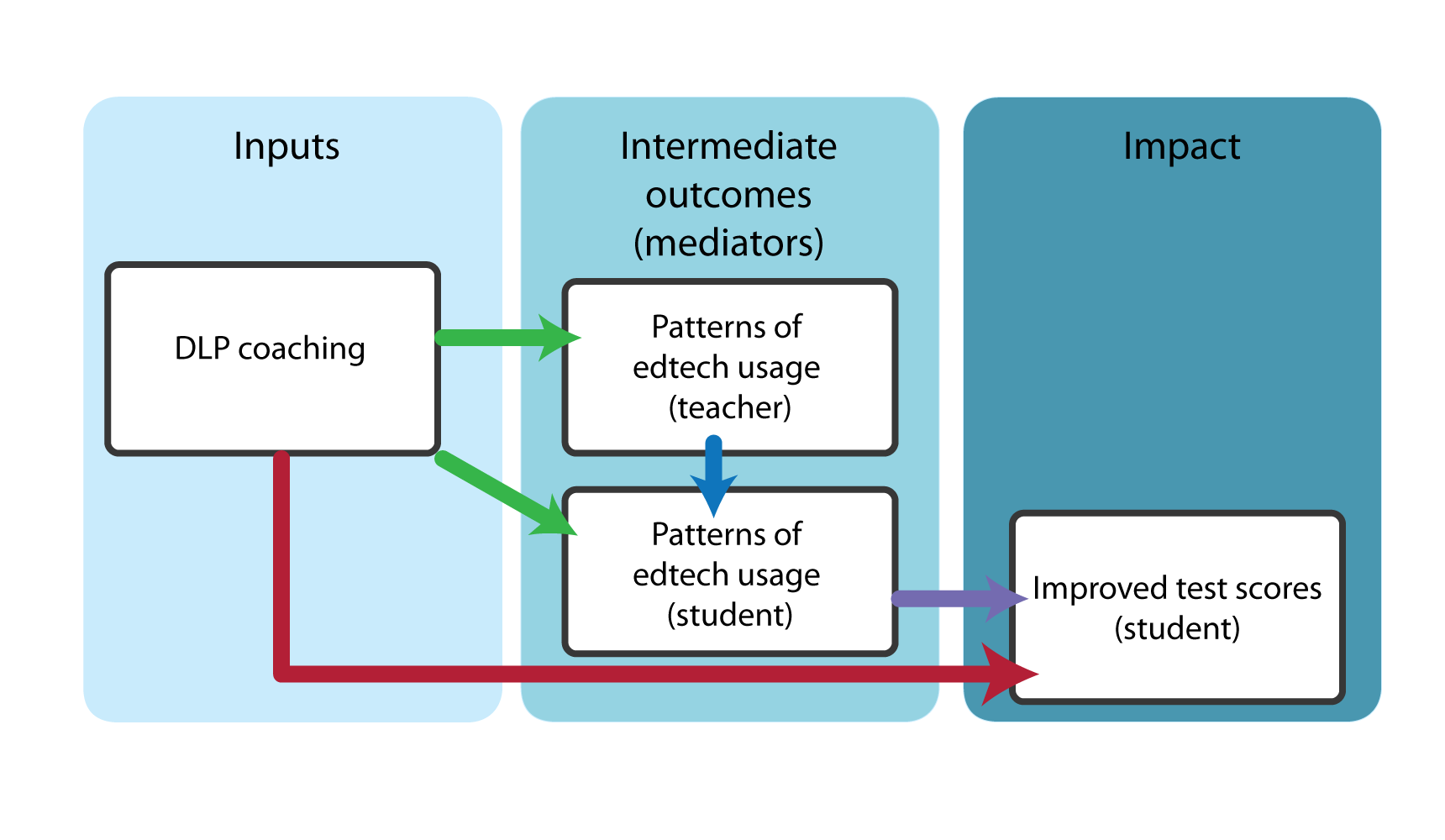

Using available data, we addressed five research questions developed from the DLP logic model (Figure 1 is a simplified version of the DLP logic model with color-coded arrows representing the research questions listed below).

RQ1. What is the impact of DLP coaching on edtech usage for (a) teachers and (b) students?

RQ2. Is there a correlation between teacher and student patterns of edtech usage?

RQ3. Did students whose teachers received DLP coaching perform better on assessments in (a) math, (b) ELA, and/or (c) writing than students whose teachers did not participate?

RQ4. Is the impact of DLP coaching on student achievement different for students with different characteristics (such as incoming achievement levels, socioeconomic status, English learner status)?

RQ5. Is there a correlation between the patterns of student edtech usage and their test scores?

Digital Promise was responsible for collecting demographic, roster, and achievement data from the districts and providing de-identified data to Empirical Education for analysis. Hapara was identified as an available source of technology usage data in Districts B and C; its analytics platform aggregates Google Suite (G Suite) usage by students and teachers across the school year. Digital Promise also provided coaching records and pre/post-implementation surveys from teachers. Table 1 presents the research questions addressed and the grade levels used in the analysis for each district.

| Research questions | District A | District B | District C |
|---|---|---|---|
| RQ1 – Teachers | n/aᵃ | 6–8 | 3–12 |
| RQ1 – Students | n/aᵃ | 6–8 | 3–5 |
| RQ2 | n/aᵃ | 6–8 | 3–5 |
| RQ3 | 6–8 | 6–8 | 4–5 |
| RQ4 | 6–8 | 6–8 | 4–5 |
| RQ5 | n/aᵃ | 6–8 | 4–8, 11 |

ᵃ Edtech usage data was not available in District A, prohibiting analysis of RQ1, RQ2, and RQ5.

In all three districts, we found evidence of positive impacts of DLP coaching on student achievement in math and ELA. These findings, across three districts in three states with different outcome measures and varying grade levels, demonstrate the promise of the DLP coaching model. We also found positive impacts of DLP coaching on teacher and student G Suite usage, as measured by Hapara, in Districts B and C, particularly for metrics that captured digital interactions between students and teachers. We see impacts of DLP coaching on both the short-term and long-term outcomes hypothesized in the logic model. We also see some evidence that the associations thought to mediate these outcomes, first between teacher and student edtech outcomes and then between student edtech usage and student outcomes, are positive. We highlight the following findings in each district.

When considered as pilot studies, these three RCEs represent an important first step in building the research base for the DLP coaching program.

Examining multiple levels of outcomes and their linkages is crucial to identifying improvements to the implementation model. We found evidence of significant impacts of DLP coaching at all levels (increased edtech usage by teachers and students and, more importantly, improved student outcomes), but some of the linkages were weak overall. This weakness may be due to measurement limitations of the available edtech usage data or to complications in the available samples of teachers and students. Identifying a balanced comparison group is crucial for this type of quasi-experimental study. Because teachers were free to choose their level of participation in DLP coaching, we expected to see evidence of selection bias; in Districts B and C, we saw bias in varying directions. Such scenarios are easy to imagine: teachers who are already proficient and interested in edtech may self-select into coaching, or school or district mandates may direct teachers with less prior exposure to technology into such programs. We can control for prior-year usage, but a valid, reliable baseline survey of all teachers, regardless of their participation in coaching, could reveal additional, important covariates for matching.
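One common way to check whether a comparison group is balanced on a baseline covariate is the standardized mean difference (SMD). The sketch below is illustrative only: the brief does not describe the balance checks used in these studies, the function name is hypothetical, and the usage values are made up rather than drawn from study data. A positive SMD would indicate that coached teachers started with higher prior-year usage; a negative SMD would indicate the reverse.

```python
from math import sqrt
from statistics import mean, variance


def standardized_mean_difference(treated, comparison):
    """Difference in group means divided by the pooled standard deviation."""
    pooled_sd = sqrt((variance(treated) + variance(comparison)) / 2)
    return (mean(treated) - mean(comparison)) / pooled_sd


# Made-up prior-year usage values for illustration; NOT data from the studies.
coached_prior_usage = [4.0, 6.0, 5.0, 7.0, 8.0]
comparison_prior_usage = [3.0, 5.0, 4.0, 6.0, 7.0]

smd = standardized_mean_difference(coached_prior_usage, comparison_prior_usage)
print(f"SMD on prior-year usage: {smd:.2f}")
```

In practice, the same check would be repeated for each covariate used in matching, including any added from a baseline survey.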

Capturing the full variation in the amount of coaching through a digital dashboard would permit analysis showing the ROI of additional coaching. Two measures of coaching were collected: logs completed by the coaches, and teachers' self-reports on post-surveys identifying the coaching cycles they participated in. These measures often conflicted, and the amount of coaching was coded from a survey question asking teachers to report a range for the average weekly amount of coaching in half-hour to one-hour increments.
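To illustrate why range-based responses limit precision, the sketch below codes each reported range as its midpoint. This is an assumption for illustration: the brief does not state how ranges were converted to numeric values, and the response format and function name here are hypothetical.

```python
def range_midpoint(response: str) -> float:
    """Convert a hypothetical 'low-high' hours response to its midpoint."""
    low, high = (float(part) for part in response.split("-"))
    return (low + high) / 2


# Hypothetical survey responses (average weekly hours of coaching); NOT study data.
responses = ["0.5-1.0", "1.0-2.0", "0.5-1.0"]
coded_hours = [range_midpoint(r) for r in responses]
print(coded_hours)
```

Every teacher who chose the same range receives the same coded value, so variation within a range is lost. A dashboard that logged actual coaching time would avoid this.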

Hapara Analytics provided aggregate G Suite usage metrics over the school year for both students and teachers, but these metrics were not broken down by class or subject. In the middle school populations studied, teachers often taught multiple subjects, and student usage would be expected to vary between subjects. More precise impact estimates could be obtained if G Suite usage could be narrowed to specific classes for each teacher and/or student, or if more targeted edtech usage data were available from single sign-on (SSO) platforms, such as the number of logins and achievements gained in subject-specific math or ELA applications (such data were not available for this study).

Apart from the findings in District A relating to students with disabilities and varying impacts by grade level, there were few significant differential impacts for different student populations. Many promising edtech programs can be associated with widening, rather than correcting, the digital divide, but we see little evidence that DLP coaching favors any particular subgroup of students. This should continue to be an area of investigation for future studies; districts focused on equity and inclusion must ensure that edtech is adopted broadly across teacher and student populations.

Reference this research brief: Lazarev, V., Schellinger, A., & Zacamy, J. (2021). Rapid Cycle Evaluations of the Dynamic Learning Project in 3 Districts (Research Brief). Empirical Education Inc. https://www.empiricaleducation.com/digital-promise