Presenting at AERA 2018

We will again be presenting at the annual meeting of the American Educational Research Association (AERA). Join the Empirical Education team in New York City from April 13 to 17, 2018.

Research presentations will include the following.

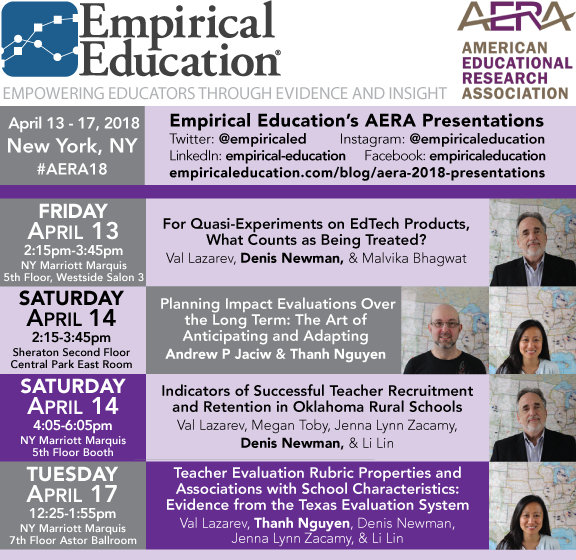

For Quasi-Experiments on EdTech Products, What Counts as Being Treated?

Authors: Val Lazarev, Denis Newman, & Malvika Bhagwat

In Roundtable Session: Examining the Impact of Accountability Systems on Both Teachers and Students

Friday, April 13 - 2:15 to 3:45pm

New York Marriott Marquis, Fifth Floor, Westside Ballroom Salon 3

Abstract: EdTech products are becoming increasingly prevalent in K-12 schools, and schools' need to evaluate the value of these products for students calls for a program of rigorous research, at least at Level 2 of the ESSA standards for evidence. This paper draws on our experience conducting a large-scale quasi-experiment in California schools. Because the intensity with which the product was implemented varied widely, it was challenging to identify which schools had used the product enough to be considered part of the treatment group.

Planning Impact Evaluations Over the Long Term: The Art of Anticipating and Adapting

Authors: Andrew P Jaciw & Thanh Thi Nguyen

In Session: The Challenges and Successes of Conducting Large-Scale Educational Research

Saturday, April 14 - 2:15 to 3:45pm

Sheraton New York Times Square, Second Floor, Central Park East Room

Abstract: Perspective. It is good practice to identify core research questions and important elements of a study's design a priori, to prevent post hoc “fishing” exercises and reduce the risk of drawing false-positive conclusions [16,19]. However, programs in education, and evaluations of them, evolve [6], making it difficult to follow a charted course. For example, over the lifetime of a program and its evaluation, new curricular content or new evidence standards for evaluations may be introduced, driving changes in program implementation and evaluation.

Objectives. This work presents three cases from program impact evaluations conducted through the Department of Education. In each case, unanticipated results or changes in study context had significant consequences for program recipients, developers and evaluators. We discuss responses, either enacted or envisioned, for addressing these challenges. The work is intended to serve as a practical guide for researchers and evaluators who encounter similar issues.

Methods/Data Sources/Results. The first case concerns the problem of keeping outcome measures in step with evolving content standards. For example, in assessing the impacts of science programs, developers and evaluators are challenged to find assessments that align with the Next Generation Science Standards (NGSS). Existing NGSS-aligned assessments are largely untested or still in development, so the evaluator must find, adapt, or develop instruments with strong reliability and with construct and face validity: instruments that will be accepted in independent review and not considered over-aligned to the interventions. We describe a hands-on approach to working with a state testing agency to develop forms that assess impacts on science generally, as well as on constructs more specifically aligned to the program being evaluated.

The second case concerns the problem of reprioritizing research questions mid-study. As noted above, researchers often identify primary (confirmatory) research questions at the outset of a study. Such questions are held to high evidence standards and are distinguished from exploratory questions, which often arise after the data have been examined and must be replicated to be considered reliable [16]. Sometimes, however, exploratory analyses produce unanticipated results that may be highly consequential. The evaluator must then grapple with the dilemma of whether to reprioritize the result or to pursue replication. We discuss this issue with reference to an RCT in which the dilemma arose.

The third case addresses the problem of designing and implementing a study that meets one set of evidence standards when the results will be reviewed according to a later version of those standards. A practical question is what to do when, as a consequence, the study falls into a lower tier of the new evidence standards. With reference to an actual case, we consider several response options, including assessing the consequences of this reclassification for future funding of the program and augmenting the research design to satisfy the new standards of evidence.

Significance. Because they must respond to the demands of changing contexts, programs in the social sciences are moving targets. They call for a flexible but well-reasoned and justified approach to evaluation. This session provides practical examples and is intended to promote discussion of solutions to challenges of this kind.

Indicators of Successful Teacher Recruitment and Retention in Oklahoma Rural Schools

Authors: Val Lazarev, Megan Toby, Jenna Lynn Zacamy, Denis Newman, & Li Lin

In Session: Teacher Effectiveness, Retention, and Coaching

Saturday, April 14 - 4:05 to 6:05pm

New York Marriott Marquis, Fifth Floor, Booth

Abstract: The purpose of this study was to identify factors associated with the successful recruitment and retention of teachers in rural Oklahoma school districts, in order to highlight potential strategies for addressing Oklahoma’s teacher shortage. The study was designed to identify the teacher-level, district-level, and community characteristics that predict which teachers are most likely to be successfully recruited and retained. A key finding is that, for teachers in rural schools, total compensation and increased responsibilities in job assignment are positively associated with successful recruitment and retention. Evidence from this study can be used to inform incentive schemes that help retain certain groups of teachers and increase retention rates overall.

Teacher Evaluation Rubric Properties and Associations with School Characteristics: Evidence from the Texas Evaluation System

Authors: Val Lazarev, Thanh Thi Nguyen, Denis Newman, Jenna Lynn Zacamy, & Li Lin

In Session: Teacher Evaluation Under the Microscope

Tuesday, April 17 - 12:25 to 1:55pm

New York Marriott Marquis, Seventh Floor, Astor Ballroom

Abstract: The Widget Effect, a seminal 2009 report, alerted the nation to the tendency of traditional teacher evaluation systems to treat teachers like widgets, undifferentiated in their level of effectiveness. Since then, a growing body of research, coupled with new federal initiatives, has catalyzed the reform of such systems. In 2014-15, Texas piloted its reformed evaluation system, collecting classroom observation rubric ratings from more than 8,000 teachers across 51 school districts. This study analyzed that large dataset and found that 26.5 percent of teachers were rated below proficient, compared with 2 percent under previous measures. The study also found a promising indication that the rubric ratings carry little bias stemming from school characteristics, since those characteristics were only minimally associated with observation ratings.

We look forward to seeing you at our sessions to discuss our research. We’re also co-hosting a cocktail reception with Division H! If you’d like an invite, let us know.