New Mexico Implementation

Empirical Education and the New Mexico Public Education Department (NMPED) are entering their fourth year of collaboration using Observation Engine to increase educator effectiveness. The work aims to deepen observers' understanding of the NMTEACH observation protocol and to improve inter-rater reliability among them. Over the course of the implementation, Observation Engine has been used for calibration and professional development with more than 2,000 educators across the state each year. The Southern Regional Education Board (SREB) is a partner and provides training on best practices. Together, users in New Mexico have pushed the boundaries of what is possible with Observation Engine. Observation Engine was initially used solely to certify observers before they conducted live classroom observations. Now, observers rely on Observation Engine’s lesson functionality for professional development throughout the year. In addition, some administrators now use videos and content from Observation Engine directly with teachers, showing them models of good instruction.

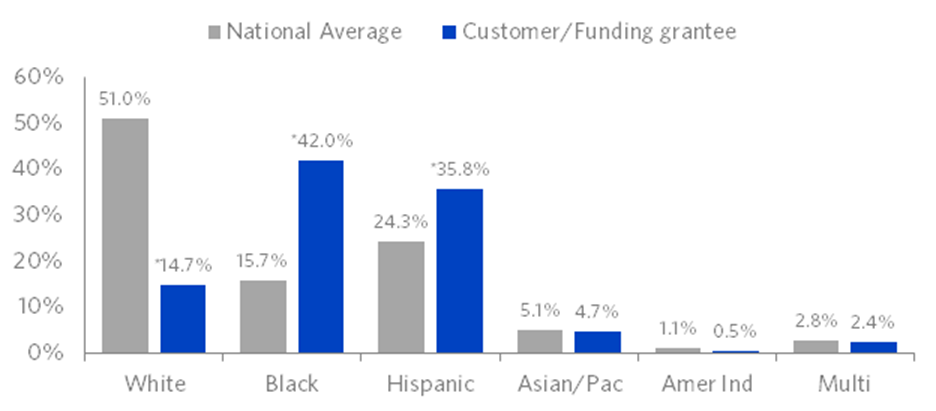

The exciting news is that the collaborative efforts of NMPED, SREB, and Observation Engine are producing noteworthy results across New Mexico, especially when compared with the rest of the nation. Kraft and Gilmour (2016) compiled teacher performance ratings from 19 states that have reformed their evaluation systems since the seminal Widget Effect report. They found that in a majority of these states, fewer than 3 percent of teachers were rated below proficient. New Mexico stood out as an outlier, with 26.2 percent of teachers rated below proficient. That rate is comparable to those seen in more realistic pilots of educator effectiveness ratings. This likely reflects strong professional development. It also likely reflects a willingness to realistically adjust proficiency thresholds based on data drawn from actual practice, such as the data captured within Observation Engine.

Kraft, M. A., & Gilmour, A. F. (2016). Revisiting the Widget Effect: Teacher Evaluation Reforms and the Distribution of Teacher Effectiveness. Brown University working paper. Retrieved July 21, 2016, from https://scholar.harvard.edu/mkraft/publications/revisiting-widget-effect-teacher-evaluation-reforms-and-distribution-teacher