SREE 2024: On a Mission to Deepen my Quant and Equity Perspectives

I am about to get on the plane to SREE.

I am excited, but also somewhat nervous.

Why?

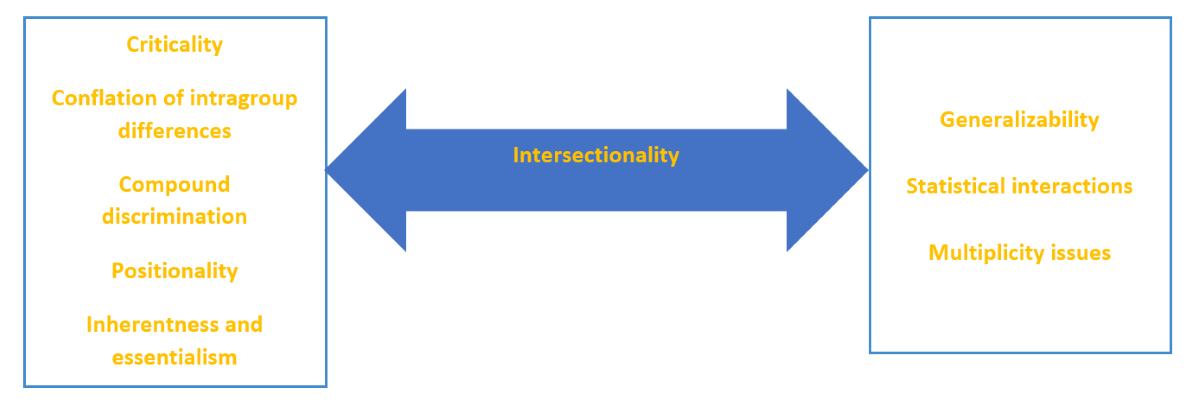

I'm excited to immerse myself in the conference – my goal is to try to straddle two paradigms: criticality and the quant tradition. SREE has historically championed empirical findings based on rigorous statistical methods.

I'm excited because I will be discussing intersectionality – a topic of interest that emerged from attending a series of Critical Perspectives webinars hosted by SREE in the last few years. I want to try to pay it back by moving the conversation forward and contributing to the critical discussion.

I'm nervous because the topic of intersectionality is new for me. The idea cuts across many areas: law, sociology, epidemiology, education. It’s a vast subject area with various literature streams. It also gets at social justice issues that I am not used to talking about, and I want to express those clearly and accurately. I understand the power and privilege of my words and presentation and want the audience to continue to inquire and move the conversation forward.

I'm nervous because issues of quantitative criticality require a person to confront their deeper philosophical commitments, assumptions, and theory of knowledge (epistemology). I have no problem with that; however, a few of my experimentalist colleagues have expressed a deep resistance to philosophy. One described it as merely a “throat clearing exercise”. (I wonder: Will those with a positivist bent leave my talk in droves?)

What is intersectionality anyway, and why was I attracted to the idea? It originates in the legal-scholarly work of Kimberlé Crenshaw. She describes a court case filed against GM:

"In DeGraffenreid, the court refused to recognize the possibility of compound discrimination against Black women and analyzed their claim using the employment of white women as the historical base. As a consequence, the employment experiences of white women obscured the distinct discrimination that Black women experienced."

The court's refusal to "acknowledge that Black women encounter combined race and sex discrimination implies that the boundaries of sex and race discrimination doctrine are defined respectively by white women's and Black men's experiences."

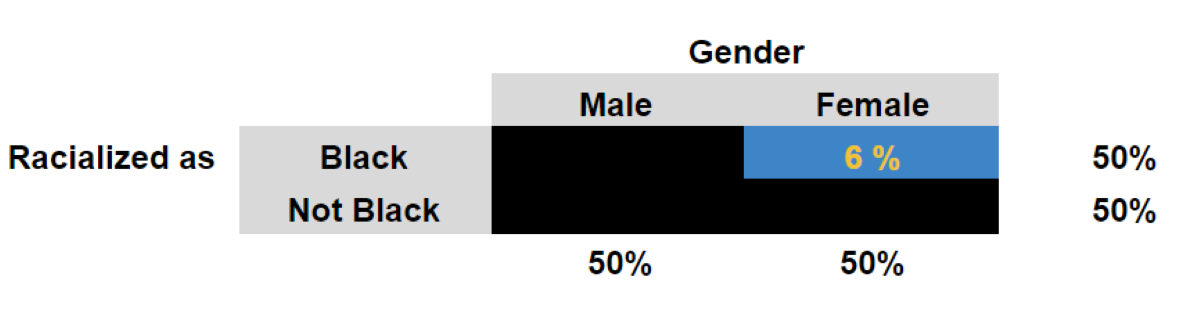

The court refused to recognize that hiring practices by GM compounded discrimination at specific intersections of socially recognized categories (i.e., Black women). The issue is obvious but can be made concrete with an example. Imagine the following distribution of equally qualified candidates. The court judgment would not have recognized the following situation of compound discrimination:
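As a minimal sketch of such a distribution, with made-up numbers that are not taken from the case record, suppose there are 100 equally qualified applicants in each race-by-sex group. The group labels, the counts, and the `hire_rate` helper are all hypothetical and only illustrate the logic:

```python
# Hypothetical data: 100 equally qualified applicants in each group.
# These counts are invented for illustration; they are NOT from DeGraffenreid.
applicants = {
    ("white", "man"): 100,
    ("white", "woman"): 100,
    ("Black", "man"): 100,
    ("Black", "woman"): 100,
}
hires = {
    ("white", "man"): 50,
    ("white", "woman"): 50,
    ("Black", "man"): 50,
    ("Black", "woman"): 0,
}


def hire_rate(race, sex):
    """Share of applicants in one race-by-sex group who were hired."""
    return hires[(race, sex)] / applicants[(race, sex)]


# Use white men as the reference group for every comparison.
reference = hire_rate("white", "man")

# Sex-only view, judged through white women's experience.
sex_gap = reference - hire_rate("white", "woman")

# Race-only view, judged through Black men's experience.
race_gap = reference - hire_rate("Black", "man")

# Intersectional view: Black women compared with the same reference group.
intersection_gap = reference - hire_rate("Black", "woman")

print(f"sex-only gap:       {sex_gap:.2f}")
print(f"race-only gap:      {race_gap:.2f}")
print(f"intersectional gap: {intersection_gap:.2f}")
```

In this sketch the sex-only and race-only comparisons both show no gap, so a single-axis analysis finds nothing to object to, while the gap for Black women is as large as it can be given the reference rate.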

Why did intersectionality pique my interest in the first place? In the course of the SREE Critical Perspectives seminars, it occurred to me that intersectionality was a concept that bridged what I know with what I want to know.

I like representing problems and opportunities in education in quantitative terms. I use models. However, I also prioritize understanding the limits of our models, with reality serving as the ultimate check on the validity of the representation. Intersectionality, as a concept, pits our standard models against a reality that is both complex and socially urgent.

Intersectionality as a bridge:

Intersectionality presents an opportunity to reconcile two worlds, which is a welcome puzzle to work on.

Here’s how I organized my talk. (See the postscript for how it went.)

- My positionality: I discussed my background, or "where I am coming from", including that most of my training is in quant methods, that I am interested in problems of causal generalizability, that I don’t shy away from philosophy, and that my children are racialized as mixed-race and their status inspired my first hypothetical example.

- I summarized intersectionality as originally conceived and developed by Crenshaw.

- I reviewed some of the developments in intersectionality among quantitative researchers who describe their work and approaches as "quantitative intersectionality".

- I explored an extension of the idea of intersectionality through the concept of "unique to group" variables: I argued for the need to diversify our models of outcomes and impacts to take into account moderators of impact that are relevant to only specific groups and that respect the uniqueness of their experiences. (I will discuss this more in another blog that is soon to come.)

- I provided two examples, one hypothetical and one real, that clarified what I mean by the role of "unique to group" variables.

- I summarized the lessons.

There were some other exceptional talks that I attended at SREE, including:

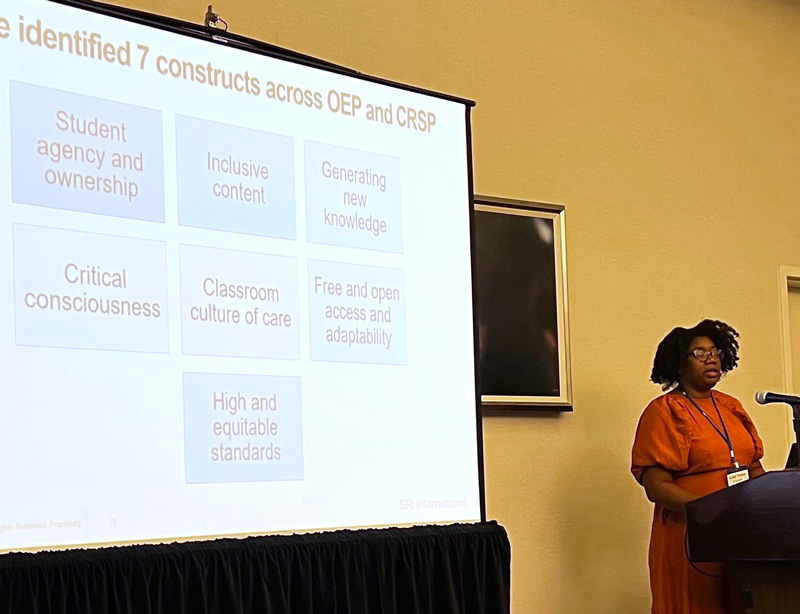

- Promoting and Defending Critical Work: Navigating Professional Challenges and Perceptions

- Equity by Design: Integrating Criticality Principles in Special Education Research

- An excellent Hedges Lecture by Neil A. Lewis "Sharing What we Know (and What Isn’t So) to Improve Equity in Education"

- Design and Analysis for Causal Inferences in and across Studies

Postscript: How it went!

The other three talks in the session in which I presented (Unpacking Heterogeneous Effects: Methodological Innovations in Educational Research) were excellent. They included work by Peter Halpin on a topic that has puzzled me for a while: how item-level information can be leveraged to assess program impacts. We almost always assess impacts on scale scores from “ready-made” tests that are based on calibrations of item-level scores. In an experiment, one effectively introduces variance into a testing situation, and I have wondered what it means for impacts to register at the item level, because each item-level effect will likely interact with the treatment effect. So “hats off” to linking psychometrics and construct validity to the discussion of impacts.

As for my presentation, I was deeply moved by the sentiments expressed by several conference goers who came up to me afterwards. One comment was "you are on the right track". Others voiced appreciation for my addressing the topic. I did feel THE BRIDGING between paradigms that I hoped to at least set in motion. This was especially true when one of the other presenters in the session, who had addressed the topic of effect heterogeneity across studies, commented: “Wow, you’re talking about some of the very same things that I am thinking”. It felt good to know that this convergence happened even though the two talks could be seen as very different on the surface. (And no, people did not leave in droves.)

Thank you Baltimore! I feel more motivated than ever. Thank you SREE organizers and participants.

Treating myself afterwards…

A special shoutout to Jose Blackorby. In the end, I did hang up my tie. But I haven’t given up on the idea – just need to find one from a hot pink or aqua blue palette.