AERA 2018 Recap: The Possibilities and Necessity of a Rigorous Education Research Community

This year’s AERA annual meeting, on “The Dreams, Possibilities, and Necessity of Public Education,” was fittingly held in New York, the city with the largest number of public school students in the country. Against this radically diverse backdrop, presenters were encouraged to diversify both the format and topics of their presentations in order to inspire thinking and “confront the struggles for public education.”

AERA’s sheer size can overwhelm its attendees, but in other ways it came as a relief. At a time when educators and education remain under-resourced, it was heartening to be reminded that a large, vibrant community of dedicated and intelligent people exists to improve educational opportunities for all students.

One theme that particularly stood out was that researchers are finding increasingly creative ways to use existing usage data from education technology products to measure impact and implementation. This reduces the cost of research and makes it more accessible to smaller businesses and nonprofits. For example, in a presentation on a software-based knowledge competition for nursing students, researchers used usage data to identify components of player styles and determine whether these styles had a significant effect on student performance. In our Edtech Research Guidelines, Empirical similarly recommends that edtech companies take advantage of their existing usage data to run impact and implementation analyses, without resorting to more expensive data collection methods. This can significantly reduce the cost of research studies: rather than one study that costs $3 million, companies can consider multiple lower-cost studies that leverage usage data and give the company a picture of how the product performs in a greater diversity of contexts.

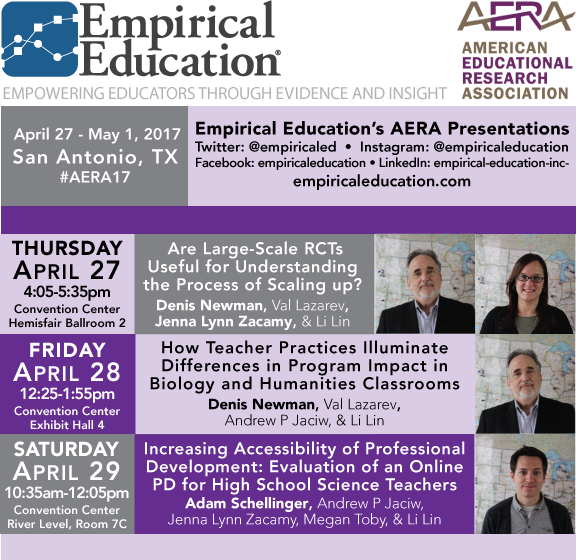

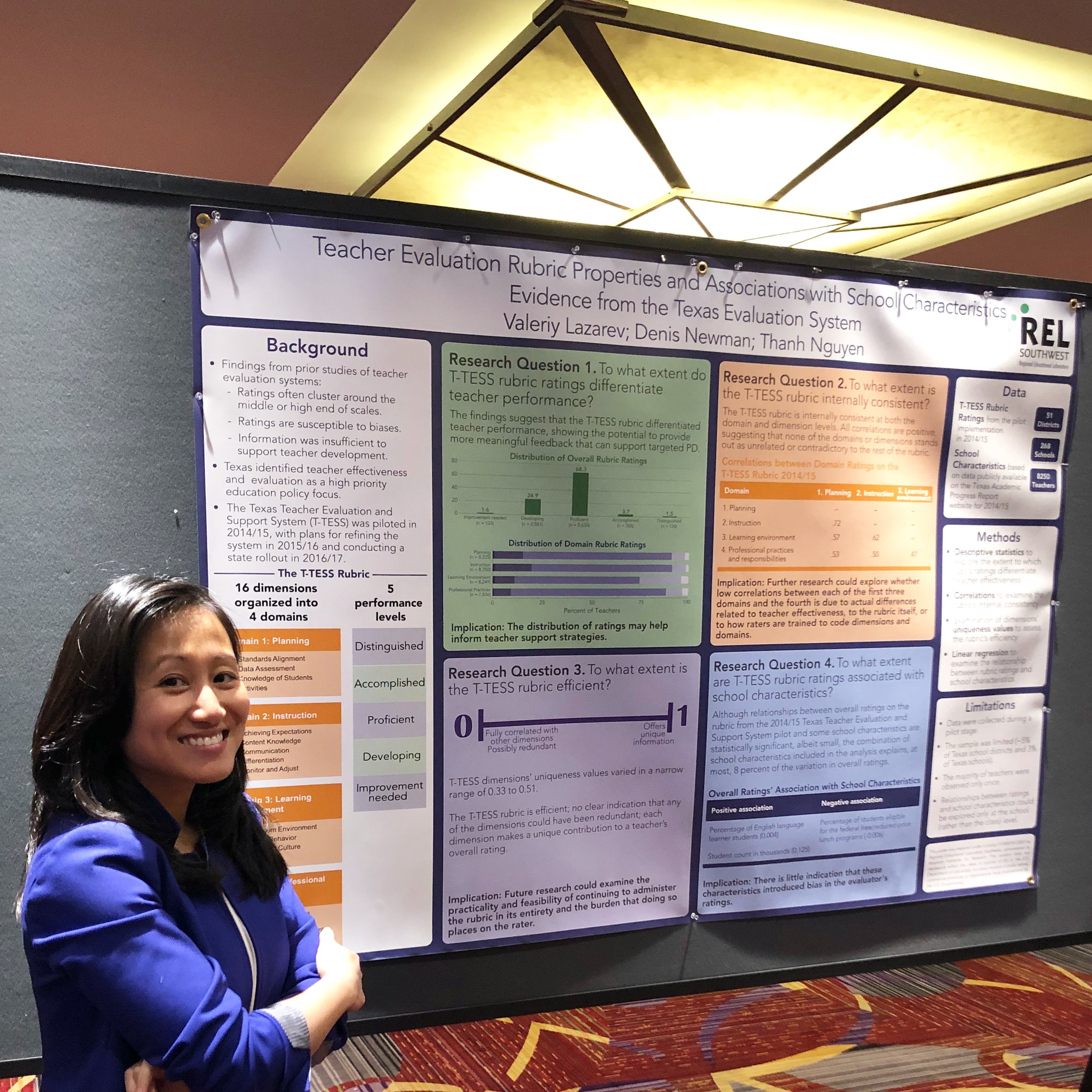

Empirical staff themselves presented on a variety of topics, including quasi-experiments on edtech products; teacher recruitment, evaluation, and retention; and long-term impact evaluations. In all cases, Empirical reinforced its commitment to innovative, low-cost, and rigorous research. You can read more about the research projects we presented in our previous AERA post.

Finally, Empirical was delighted to co-host the Division H AERA Reception at the Supernova bar at the Novotel Hotel. If you ever wondered whether Empirical knows how to throw a party, wonder no more! A few pictures from the event are below. View all of the pictures from our event on Facebook!

We had a great time and look forward to seeing everyone at the next AERA annual meeting!