On February 21, 2012, the U.S. Department of Education released the final report of an experiment that Empirical Education has been working on for the last six years. The report, titled Evaluation of the Effectiveness of the Alabama Math, Science, and Technology Initiative (AMSTI), is now available on the Institute of Education Sciences website. The Alabama State Department of Education held a press conference to announce the findings, attended by Superintendent of Education Bice, AMSTI staff, educators, students, and the study’s co-principal investigator, Denis Newman, CEO of Empirical Education.

AMSTI was developed by the state of Alabama and introduced in 2002 with the goal of improving mathematics and science achievement in the state’s K-12 schools. Empirical Education was primarily responsible for conducting the study (design, data collection, analysis, and reporting) under its subcontract with the Regional Education Lab, Southeast; the study was initiated through a research grant to Empirical. Researchers from the Academy for Educational Development, Abt Associates, and ANALYTICA made important contributions to design, analysis, and data collection.

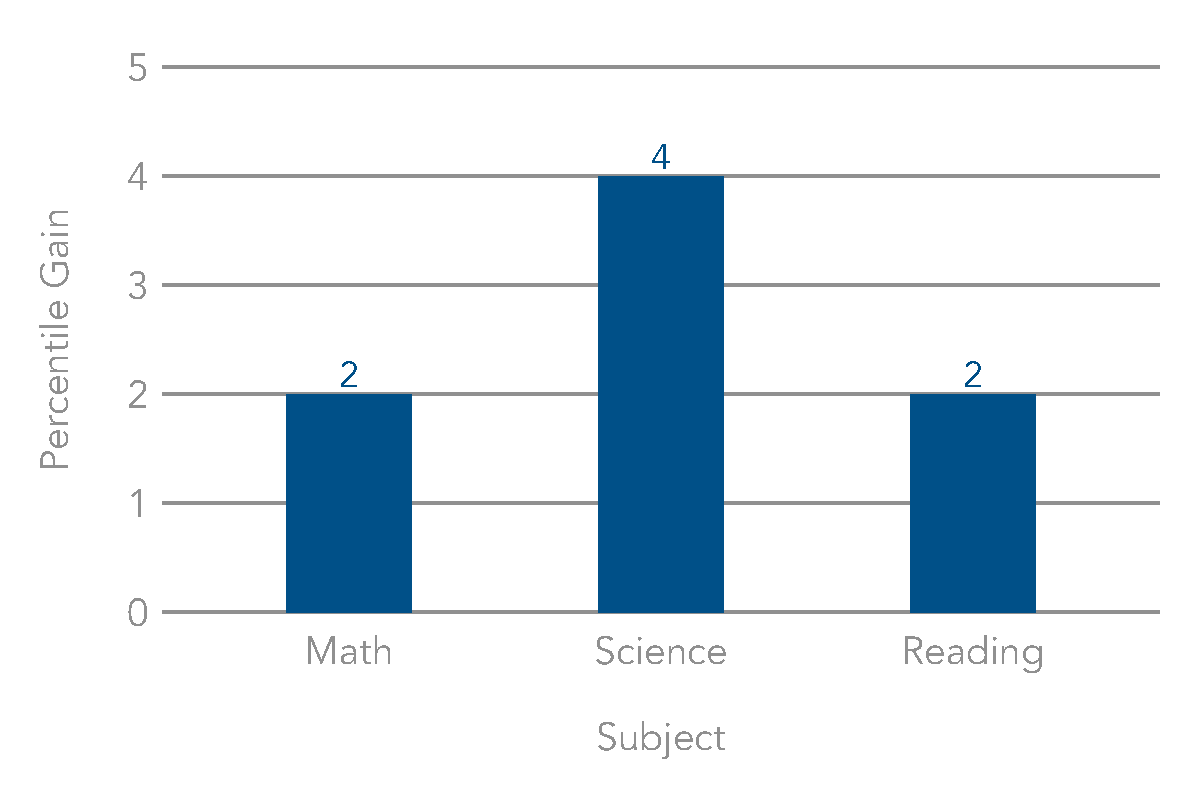

The findings show that after one year, students in the 41 AMSTI schools showed a gain in mathematics achievement equivalent to 28 days of additional student progress compared with students receiving conventional mathematics instruction. After one year, the study found no difference in science achievement. It also found that AMSTI had an impact on teachers’ active learning classroom practices in math and science, which, according to the theory of action posited by AMSTI, should in turn have an impact on achievement. Further exploratory analysis found effects on student achievement in both mathematics and science after two years. The study also explored reading achievement, where it found significant differences between the AMSTI and control groups after one year. Exploration of differential effects across student demographic categories found consistent results for gender, socio-economic status, and pretest achievement level for math and science. For reading, however, the breakdown by student ethnicity suggests a differential benefit.

Just about everybody at Empirical worked on this project at one point or another. Besides the three of us (Newman, Jaciw, and Zacamy) who are listed among the authors, we want to acknowledge the past and current employees whose efforts made the project possible: Jessica Cabalo, Ruthie Chang, Zach Chin, Huan Cung, Dan Ho, Akiko Lipton, Boya Ma, Robin Means, Gloria Miller, Bob Smith, Laurel Sterling, Qingfeng Zhao, Xiaohui Zheng, and Margit Zsolnay.

With the solid cooperation of the state’s Department of Education and the AMSTI team, approximately 780 teachers and 30,000 upper-elementary and middle school students in 82 schools from five regions in Alabama participated in the study. The schools were randomly assigned to one of two groups: 1) those that received AMSTI starting the first year, or 2) those that received “business as usual” the first year and began participating in AMSTI the second year. With only a one-year delay before the control group entered treatment, the two-year impact was estimated using statistical techniques developed by, and with the assistance of, our colleagues at Abt Associates. The Academy for Educational Development assisted with data collection and analysis of training and program implementation.

Findings of the AMSTI study will also be presented at the Society for Research on Educational Effectiveness (SREE) Spring Conference, taking place in Washington, D.C., from March 8-10, 2012. Join Denis Newman, Andrew Jaciw, and Boya Ma on Friday, March 9, 2012, from 3:00pm-4:30pm, when they will present findings of their study titled “Locating Differential Effectiveness of a STEM Initiative through Exploration of Moderators.” A symposium on the study, including the major study collaborators, will be presented at the annual conference of the American Educational Research Association (AERA) on April 15, 2012, from 2:15pm-3:45pm at the Marriott Pinnacle / Pinnacle III in Vancouver, Canada. This session will be chaired by Ludy van Broekhuizen (director of REL-SE) and will include presentations by Steve Ricks (director of AMSTI); Jean Scott (SERVE Center at UNCG); Denis Newman, Andrew Jaciw, Boya Ma, and Jenna Zacamy (Empirical Education); Steve Bell (Abt Associates); and Laura Gould (formerly of AED). Sean Reardon (Stanford) will serve as the discussant. A synopsis of the study will also be included in the Common Guidelines for Education Research and Development.