AERA 2022 Annual Meeting

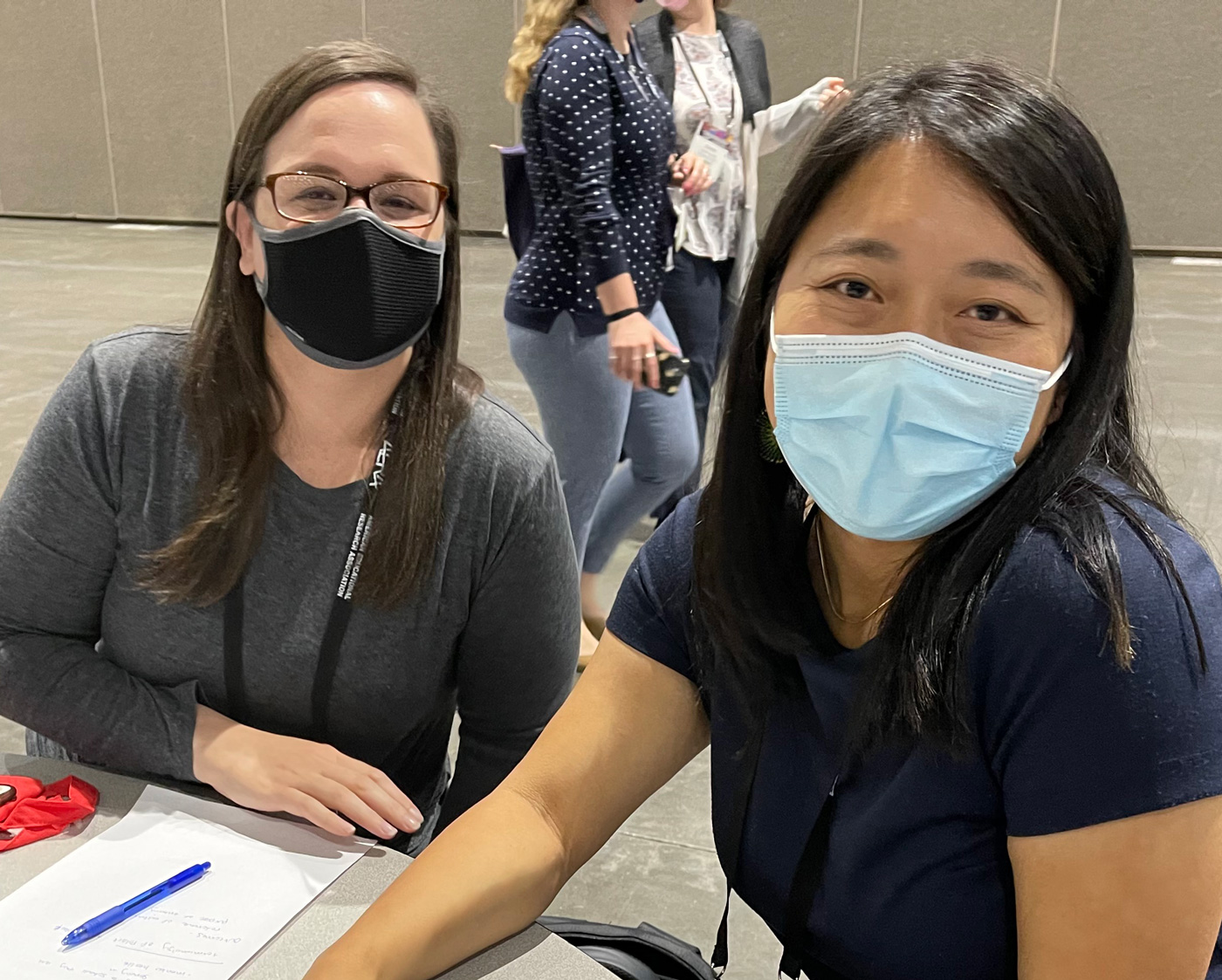

We really enjoyed being back in person at AERA for the first time in a few years. We missed the interaction and collaboration that can only come from talking and engaging face to face. This year's theme, Cultivating Equitable Education Systems for the 21st Century, is highly aligned with our company goals, and we value and appreciate the extraordinary work of so many researchers and practitioners who are dedicated to discovering equitable educational solutions.

We met some of the team from ICPSR, the Inter-university Consortium for Political and Social Research, and had a chance to learn about their guide to social science data preparation and archiving. We attended too many presentations to cover them all, so below we highlight a few that stood out to us.

Thursday, April 21, 2022

On Thursday, Sze-Shun Lau and Jenna Zacamy presented the impacts of Collaboration and Reflection to Enhance Atlanta Teacher Effectiveness (CREATE) on the continuous retention of teachers through their second year. The presentation was part of a roundtable discussion with Jacob Elmore, Dirk Richter, Eric Richter, Christin Lucksnat, and Stefanie Marshall.

It was a pleasure to hear about the work coming out of the University of Potsdam examining the connections between the extraversion levels of alternatively certified teachers and their job satisfaction and student achievement. We also learned about opportunities for early-career teachers at the University of Minnesota to be part of learning communities with whom they can openly discuss racialized matters in school settings and develop their racial consciousness. Finally, we had the opportunity to talk with our fellow presenters about constructive supports for early-career teachers that place value on the experiences and motivating factors they bring to the table, and about other commonalities in our work aiming to increase retention of teachers in diverse contexts.

Friday, April 22, 2022

Our favorite session on Friday was a paper session titled Critical and Transformative Perspectives on Social and Emotional Learning. In this session, Dr. Dena Simmons shared her paper titled Educator Freedom Dreams: Humanizing Social and Emotional Learning Through Racial Justice and talked about SEL as an approach to alleviate the stressors of systemic racism from a Critical Race Theory education perspective.

We tweeted about it from AERA.

"We cannot SEL away racism - we need structural solutions to structural problems." - @DenaSimmons at @AERA_EdResearch today.#AERA22 #AERA2022 pic.twitter.com/f36OIsWWTM

— Empirical Education (@empiricaled) April 22, 2022

Another interesting session from Friday was about the future of IES research. Jenna sat in on a small group discussion around the proposed future topic areas of IES competitions. We are most interested in whether and how IES will implement the recommendation to have a “systematic, periodic, and transparent process for analyzing the state of the field and adding or removing topics as appropriate.”

Saturday, April 23, 2022

On Saturday morning, there was a symposium titled Revolutionary Love: The Role of Antiracism in Affirming the Literacies of Black and Latinx Elementary Youth. The speakers talked about the three tenets of providing thick, revolutionary love to students: believing, knowing, and doing.

Saturday afternoon, in a presidential session titled Beyond Stopping Hate: Cultivating Safe, Equitable and Affirming Educational Spaces for Asian/Asian American Students, we heard CSU Assistant Professor Edward Curammeng give crucial advice to researchers: “We need to read outside our fields, we need to re-read what we think we’ve already read, and we need to engage Asian American voices in our research.”

After our weekend at AERA, we returned home refreshed and thinking about the importance of making sure students and teachers see themselves in their school contexts. Dr. Simmons provided a crucial reminder that remaining neutral and failing to integrate the sociopolitical contexts of educational issues only furthers erasure. As our evaluation of CREATE continues, we plan to incorporate some of the great feedback we received at our roundtable session, including further exploring the motivations that led our study participants to enter the teaching profession and how their work with CREATE adds fuel to those motivations.

Did you attend the annual AERA meeting this year? Tell us about your favorite session or something that got you thinking.